Scaling Nostr -- by Sondre Bjellas:

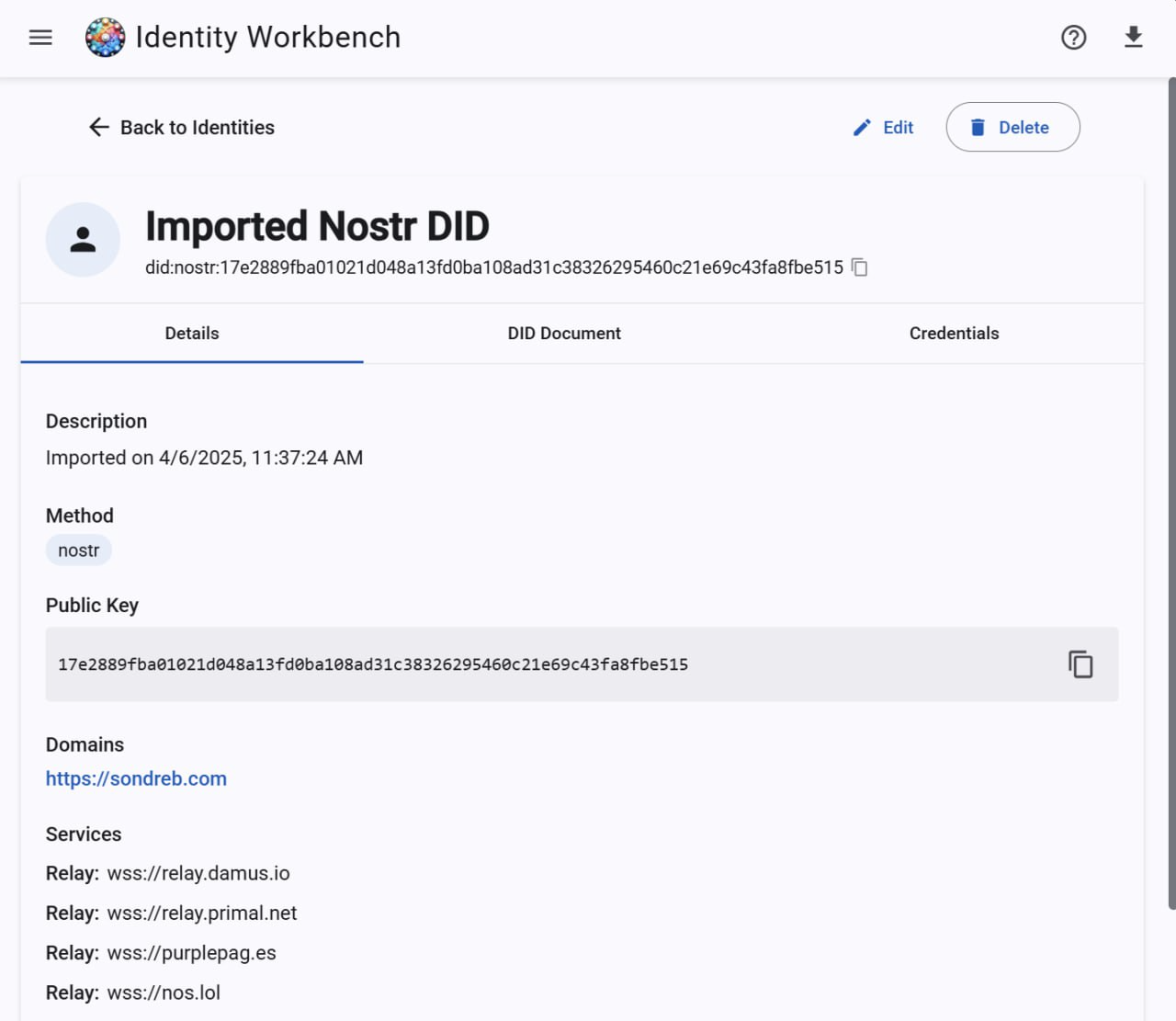

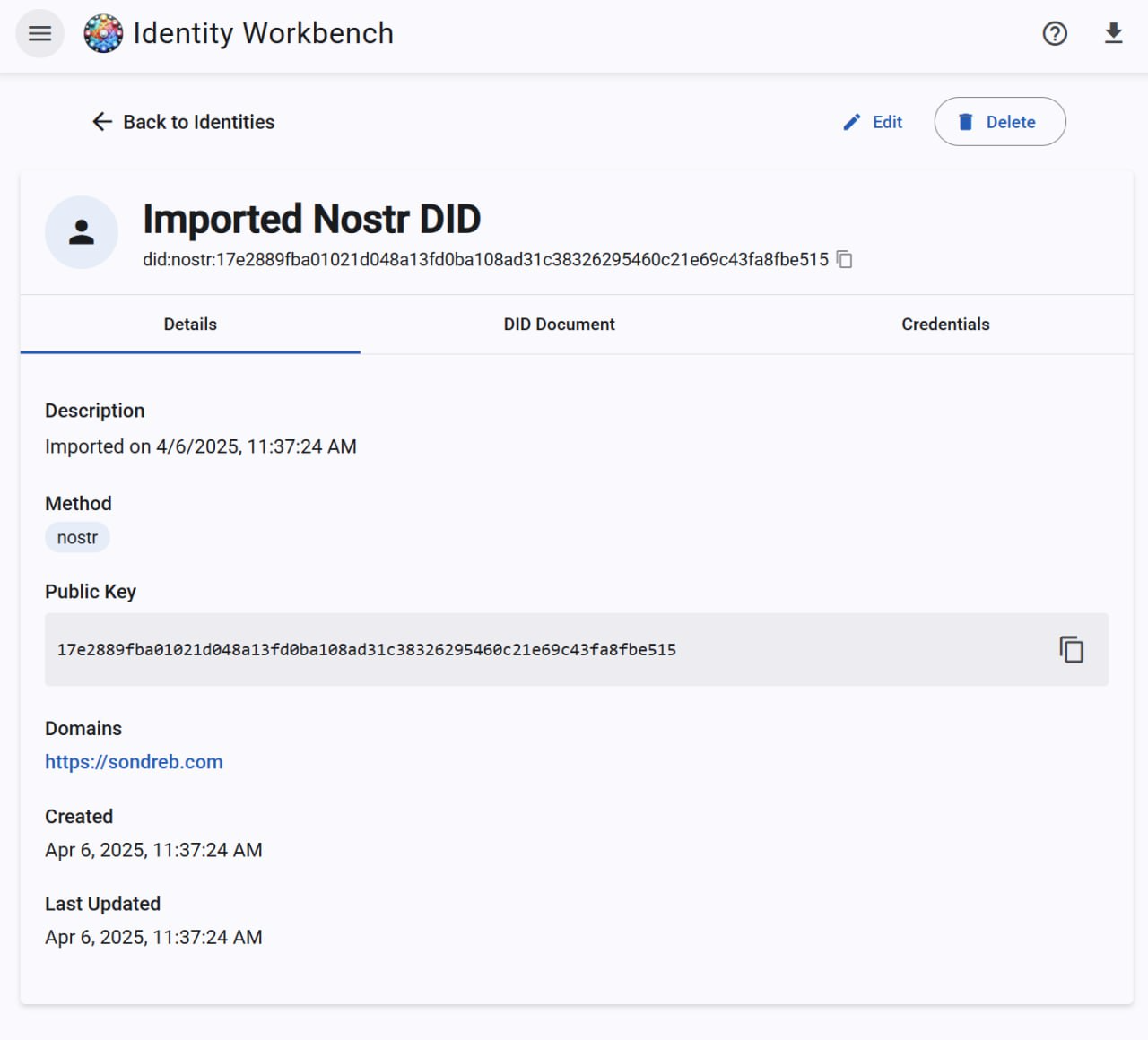

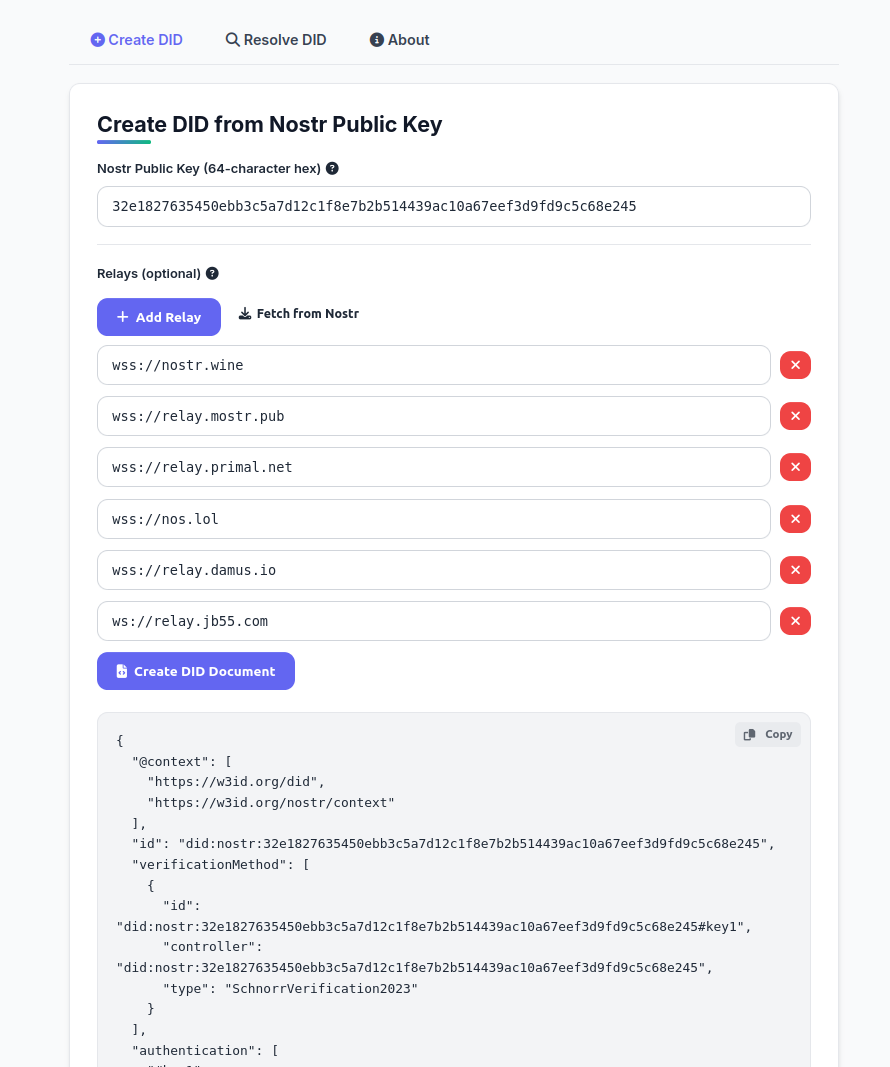

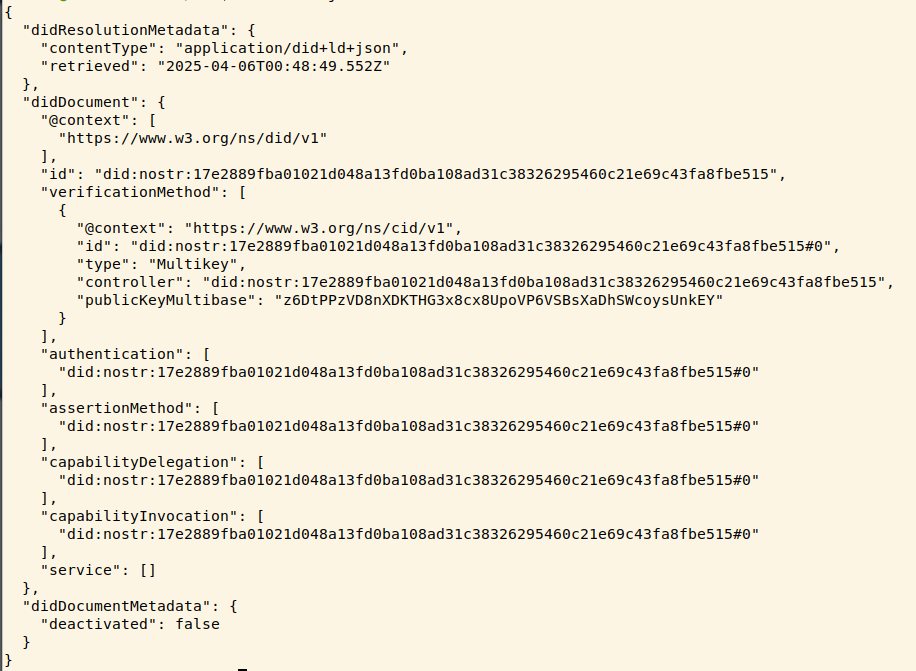

"What I want to show here is that, without Nostr users having to run a new client or publish a new event for a DID Document, this DID Document is constructed from existing data on Nostr relays. The magic happens in the bootstrapping, which is all about kind:10002."
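To make the quoted idea concrete, here is a minimal sketch of how a DID Document might be assembled from a relay list event a user has already published. It relies on the NIP-65 convention that a kind:10002 event carries `["r", <relay url>, <optional "read"/"write" marker>]` tags. Everything else is an assumption made for illustration and is not taken from the article: the `did:nostr:<pubkey>` identifier format, the `"Relay"` service type, the function names, and the sample event.

```python
from typing import Any


def relays_from_kind_10002(event: dict[str, Any]) -> list[dict[str, str]]:
    """Extract relay URLs and read/write markers from a kind:10002 event."""
    if event.get("kind") != 10002:
        raise ValueError("expected a kind:10002 relay list event")
    relays = []
    for tag in event.get("tags", []):
        # NIP-65 relay tags: ["r", "<url>"] or ["r", "<url>", "read" | "write"].
        if len(tag) >= 2 and tag[0] == "r":
            # No marker means the relay is used for both reading and writing.
            marker = tag[2] if len(tag) > 2 else "read+write"
            relays.append({"url": tag[1], "marker": marker})
    return relays


def build_did_document(event: dict[str, Any]) -> dict[str, Any]:
    """Build a DID-Document-shaped dict from an existing relay list event."""
    # Assumption: the DID is derived directly from the hex public key.
    did = f"did:nostr:{event['pubkey']}"
    return {
        "id": did,
        "service": [
            {
                "id": f"{did}#relay{i}",
                "type": "Relay",  # hypothetical service type
                "serviceEndpoint": relay["url"],
            }
            for i, relay in enumerate(relays_from_kind_10002(event), start=1)
        ],
    }


# Hypothetical sample event; the pubkey and relay URLs are placeholders.
example_event = {
    "kind": 10002,
    "pubkey": "ab" * 32,
    "tags": [
        ["r", "wss://relay.example.com"],
        ["r", "wss://inbox.example.org", "read"],
    ],
}

did_document = build_did_document(example_event)
```

The point of the sketch is that nothing new has to be published: the document is derived entirely from the public key and the relay list that already exist on relays.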

Read more on Medium: Scaling Nostr

My story with Nostr began in 2022, with a few posts and direct messages between me and my friend Jonny. Those messages are still stored on…