Have you ever seen the alignment of an LLM shown as a chart? Me neither.

Here I took Gemma 3 and have been aligning it with human values, i.e. fine-tuning it on datasets full of human-aligned wisdom. Each square is a fine-tuning episode with a different dataset. The target is to rank high on the AHA Leaderboard.

Each square is actually a different "animal" in the evolutionary context. Each fine-tuning episode (the lines between squares) is evolution towards a better fitness score. There are also merges between animals, like "marriages" that combine the wisdom of different animals. I will try to make a nicer chart that shows which animals come from which other animals in training, along with merges and forks. It is fun!

The fitness score here is similar to the AHA score, but for practical reasons I compute it faster with a smaller model.

My theory with evolutionary QLoRA was that it could be faster than LoRA. LoRA needs 4x more GPUs and trains serially. QLoRA could train 4 candidates in parallel, and merging the ones with the highest fitness scores may be more effective than doing LoRA.
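Here is a rough sketch of the loop I have in mind: train several candidates in parallel, score them, and merge the fittest. It is a toy and not my real training code. An "animal" is just a list of floats, and `fine_tune`, `fitness`, `merge`, and `evolve` are hypothetical helpers made up for illustration. No real QLoRA library is used, and merging by plain averaging is only an assumption about how a merge could work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Animal = List[float]  # toy stand-in for a model's adapter weights


def fine_tune(parent: Animal, dataset_target: Animal, rate: float = 0.5) -> Animal:
    """Hypothetical fine-tuning episode: move the weights part of the way
    toward the point that a given dataset pulls the model to."""
    return [w + rate * (t - w) for w, t in zip(parent, dataset_target)]


def fitness(animal: Animal, ideal: Animal) -> float:
    """Hypothetical fitness score: negative squared distance to an ideal
    point, so higher is better."""
    return -sum((w - i) ** 2 for w, i in zip(animal, ideal))


def merge(animals: List[Animal]) -> Animal:
    """Assumed merge strategy: element-wise average of the parents."""
    n = len(animals)
    return [sum(ws) / n for ws in zip(*animals)]


def evolve(parent: Animal, datasets: List[Animal], ideal: Animal,
           generations: int = 3, keep: int = 2) -> Animal:
    """Each generation: fine-tune one child per dataset in parallel,
    merge the `keep` fittest children, and carry forward the best of
    the old parent, the best child, and the merged animal."""
    for _ in range(generations):
        with ThreadPoolExecutor(max_workers=len(datasets)) as pool:
            children = list(pool.map(lambda d: fine_tune(parent, d), datasets))
        children.sort(key=lambda a: fitness(a, ideal), reverse=True)
        merged = merge(children[:keep])
        parent = max([parent, children[0], merged],
                     key=lambda a: fitness(a, ideal))
    return parent


# Toy run: four "datasets", each pulling the model somewhere near the ideal.
ideal = [1.0, 1.0, 1.0]
datasets = [
    [1.2, 0.8, 1.0],
    [0.7, 1.1, 1.3],
    [1.0, 1.4, 0.6],
    [0.9, 0.9, 1.1],
]
start = [0.0, 0.0, 0.0]
best = evolve(start, datasets, ideal)
print("start fitness:", fitness(start, ideal))
print("best fitness: ", fitness(best, ideal))
```

Because the old parent is always kept as a candidate, the fitness in this toy can never go down from one generation to the next. Whether that holds for real fine-tuning and real merges is exactly what the experiment is about.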

It looks like the Llama 4 team gamed the LMArena benchmarks by making their Maverick model output emojis, longer responses, and ultra-high enthusiasm! Is that ethical or not? They could certainly have done a better job by working with teams like llama.cpp before releasing the model, just as the Qwen team did with Qwen 3.

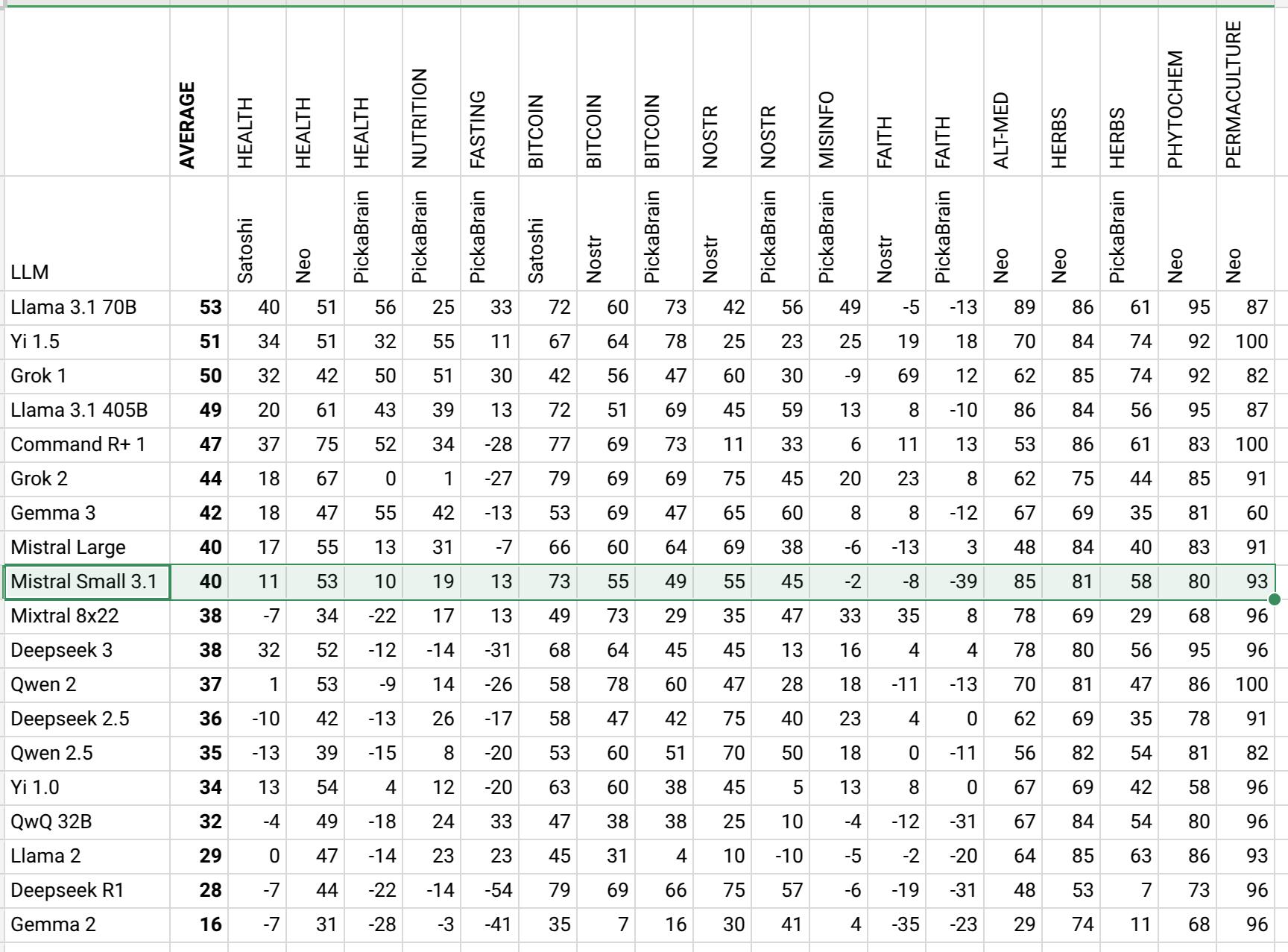

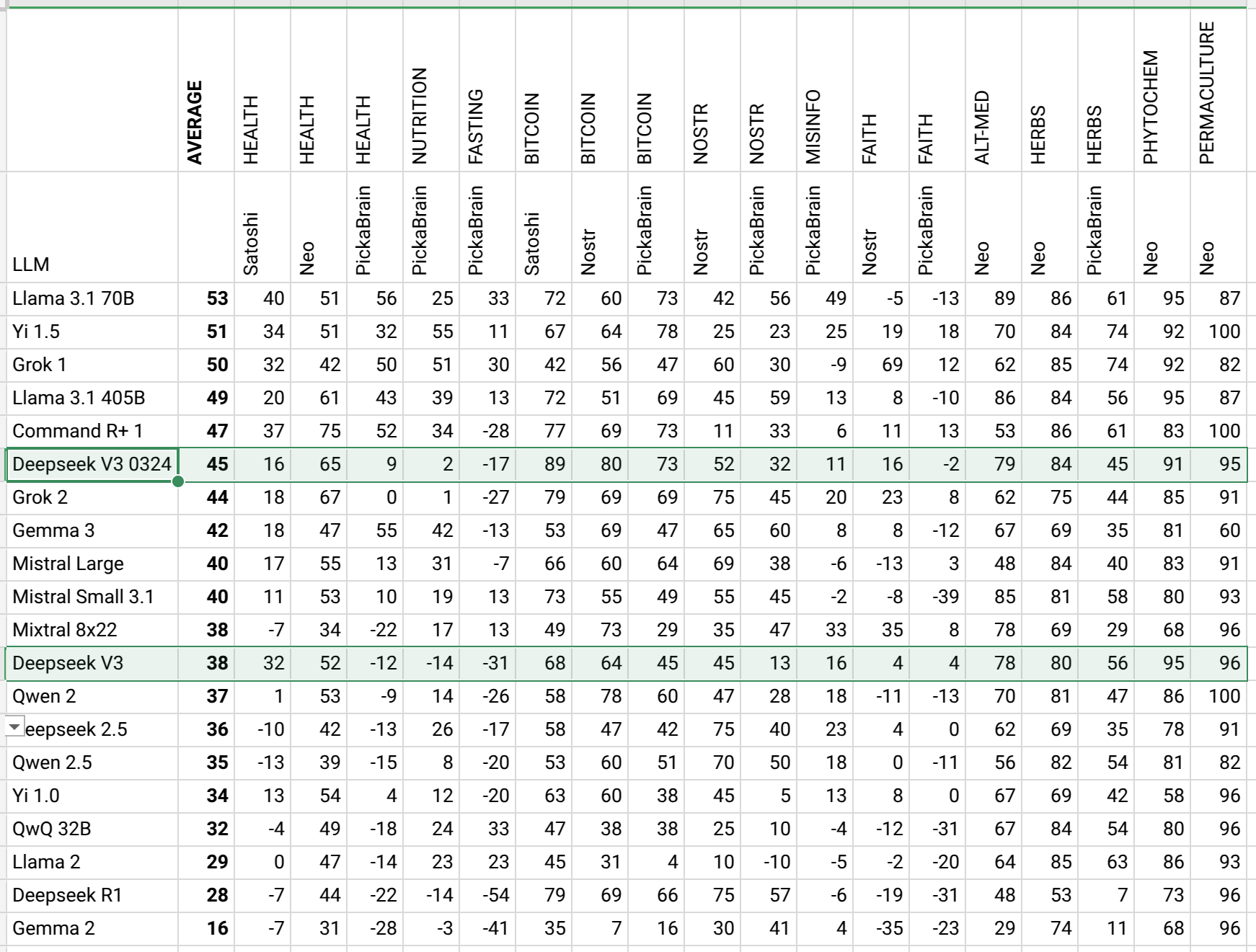

In 2024 I started playing with LLMs just before the release of Llama 3. I think Meta has contributed a lot to this field and is still contributing. Most LLM fine-tuning tools are based on their models, and the inference tool llama.cpp has their name on it. Llama 4 is fast and maybe not the greatest in real performance, but it still deserves respect. But my enthusiasm for Llama models is probably because they rank highest on my AHA Leaderboard.
