Venice.ai and select the llama3.1 model. Great option for a big model that you can’t run locally.

Otherwise a local llama3.1 20B is solid if you have the RAM

Replies (3)

Very helpful! I'm investigating both for work, where we have great hardware, and for home, so this is great to know.

local grow! t-y Guy Swann

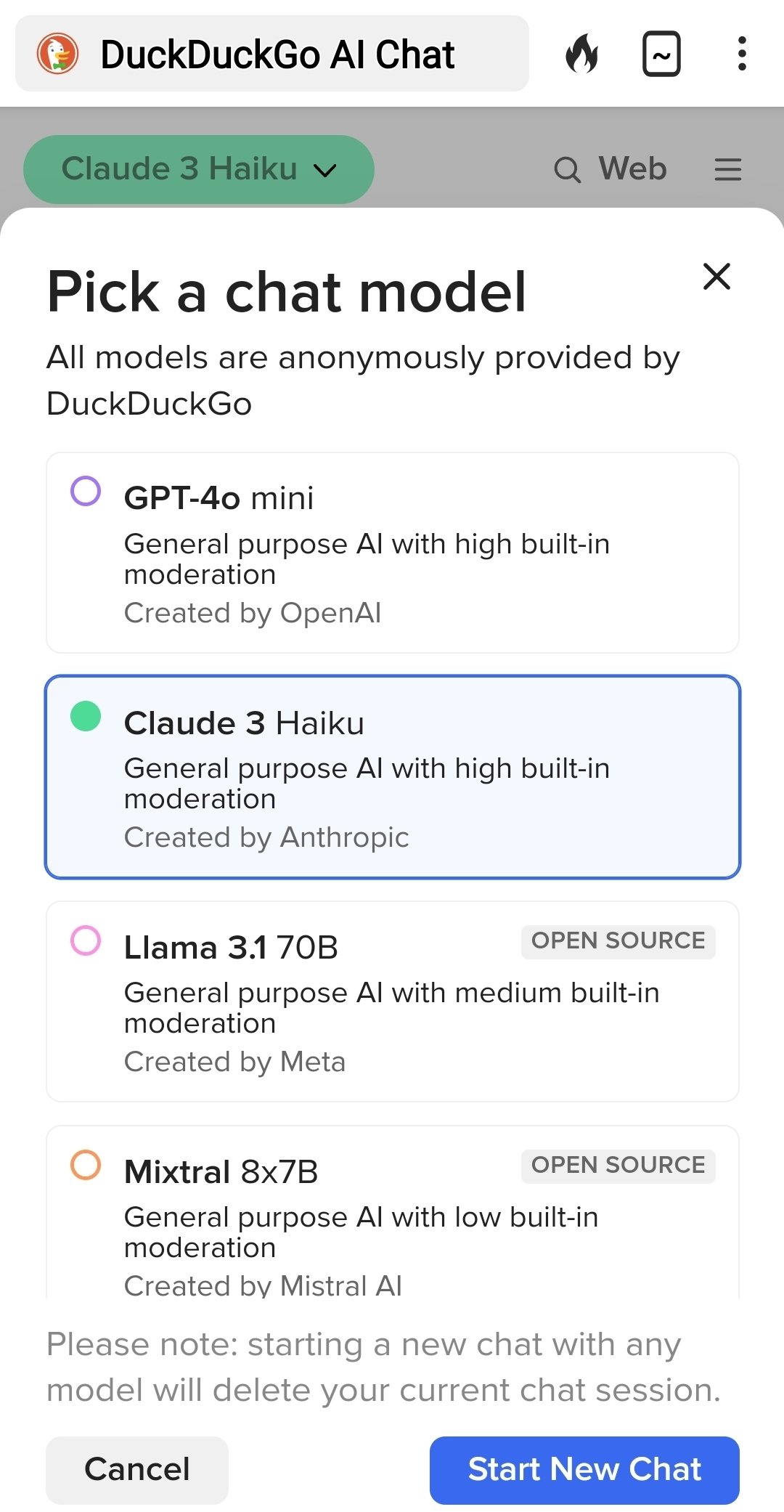

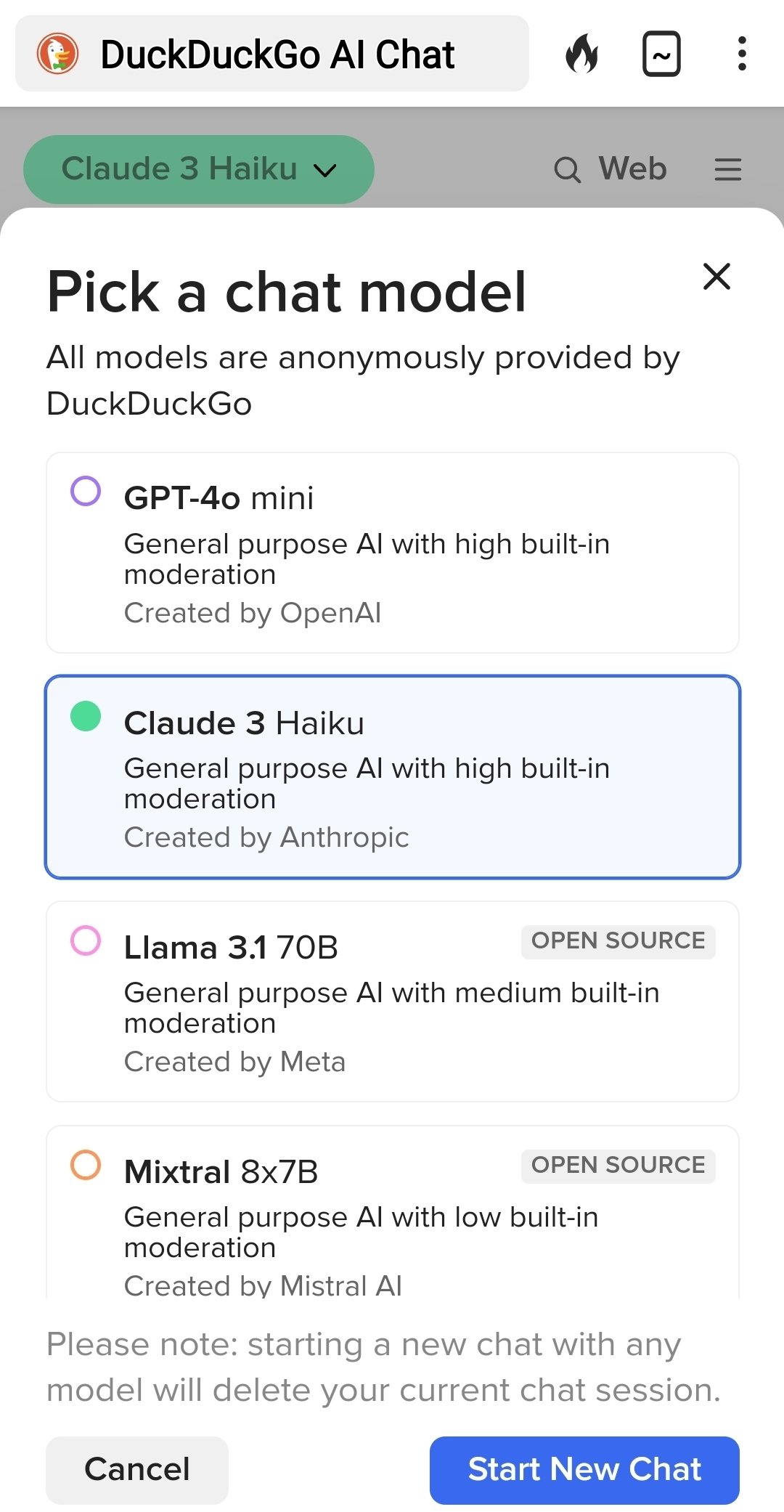

DuckDuckGo has a free AI chatbot with a Llama 3.1 70B option. I use it all the time, but Venice also does images, right?