OpenSats and other “charities” have been hesitant to provide funding to critical Nostr & Bitcoin infrastructure and tooling.

Meanwhile, they welcome low-effort projects and prototypes that never materialize with open arms.

I will zap you if you renote this and reply with a project that has failed to get funding or has lost funding:

⚡️250 for very high value projects

⚡️50 for good quality projects

Maybe we can make a registry for direct funding.


Replies (97)

I reposted, but funding-first seems like an insane way to approach nostr right now.

Build something that attracts users first, then generate revenue. It shouldn't take much starting capital to build something that attracts users, and there shouldn't be any reliance on early investors.

Did SeedSigner get a grant? I only remember there was some discussion around it, but I'm not sure how it ended.

My project proposals have relied on minimal upfront funding compared to what they can probably make in the long term, and if I were a coder I'd just work without fundraising until nostr gets bigger.

Xmrsigner, a SeedSigner fork, is funded by Monero's CCS.

It's always the Monero community funding the serious shit.

For me, at the moment at least, I can't apply as it would get rejected because of my geolocation and potentially the next geolocation I'd end up in.

Having a page/space where projects can get funding from the public sounds great. Isn't that basically Geyser or Angor, though? I'm assuming you're thinking of a different approach?

Regardless, though, here are my projects:

The most developed: @DEG Mods

A game mod platform that aims to fix the censorship issues in the modding scene (not just a technical fix, but also business and marketing plans to be executed for sustainability and growth, aiming for proper market domination).

Next up: DEGA

A combined Steam/Itch/GOG alternative that addresses the growing censorship problems in the games industry on the sales and ownership fronts, along with a slew of other business issues. It would also massively help the project above, as it is a vertical extension of it.

Alongside the two: DNN

Solves Zooko's Triangle. Decentralized naming (without the issues of Namecoin, ENS, Nomen, Ordinals, drive/space chains, etc.), so we'll actually have a good alternative solution to the ICANN problem, with many secondary benefits as well (some of which solve a few nostr issues).

DEGA Store

DNN

Thank you, @node

@OpenSats's reason for rejecting Freerse's funding application is truly unbelievable.

They claim to fund "open-source projects," but their reason for rejecting us wasn't that Freerse isn't open-source, but rather—that we didn't have those "little green dots" on our GitHub.

Their only publicly stated requirement was "must be open-source," but their actual evaluation criterion was the activity record on GitHub.

For a year and a half before applying, we had been developing locally and publicly releasing all progress and version updates on Nostr via @Freerse. Freerse users also came forward to speak up and testify on our behalf. I explained all of this to the OpenSats staff.

But OpenSats completely ignored these real development records and user feedback. They only cared about the little green dots on GitHub.

The absurdity is that this "unwritten rule" wasn't included in any application guidelines. They didn't even bother to try the applicant's product, look at the code, or consider user feedback; they only stared at the GitHub page.

If OpenSats is truly going to insist on this formalism,

then they should just change their name to "Little Green Dot sats."

—Because in their eyes, a row of little green dots is more important than real users and product value.

They would rather fund projects that nobody uses but have many little green dots on GitHub than listen to the voices of real users.

Freerse - The Future of Social Payments

A decentralized communication and social app built on the Nostr protocol, enabling instant Bitcoin Lightning payments and censorship-resistant soci...

🫂

Monero community is early Bitcoiners. That's why.

I want a direct user-to-dev experience with premade funding lists and the option to manually choose which projects to fund and what percentage each one gets.
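Choosing recipients "by %" comes down to splitting one amount of sats into whole-number shares. A minimal sketch, assuming each recipient gets a share proportional to a weight (similar in spirit to the weights on zap-split tags described in NIP-57); the function name `split_zap`, the `name:weight` argument format, and the rule that rounding leftovers go to the first recipient are illustrative choices, not part of any existing client.

```sh
# split_zap TOTAL_SATS name:weight [name:weight ...]   (hypothetical helper)
# Prints "name sats" per recipient, proportional to weight / (sum of weights).
# Integer division rounds each share down; the leftover sats go to the first
# recipient so the printed amounts always add up to TOTAL_SATS.
split_zap() (
  total=$1; shift
  sum=0
  for entry in "$@"; do
    sum=$((sum + ${entry##*:}))
  done
  if [ "$sum" -le 0 ]; then
    echo "split_zap: weights must add up to more than 0" >&2
    exit 1
  fi
  # First pass: total of the rounded-down shares, to find the remainder.
  allocated=0
  for entry in "$@"; do
    allocated=$((allocated + total * ${entry##*:} / sum))
  done
  remainder=$((total - allocated))
  # Second pass: print the shares; only the first one absorbs the remainder.
  for entry in "$@"; do
    echo "${entry%:*} $((total * ${entry##*:} / sum + remainder))"
    remainder=0
  done
)
```

For example, `split_zap 1000 proj-a:50 proj-b:30 proj-c:20` prints `proj-a 500`, `proj-b 300` and `proj-c 200`; each line would then become its own zap or invoice.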

also happens to be a tool upon which we could build a direct funding alternative. We just need to integrate zapraising crowdfunding. Should be simple.

Did you get an explanation of why it was rejected? How?

I am currently thinking of an article with zap splits. Right now I am compiling an opinionated list (and you have made it onto it).

The reason for the rejection was that Freerse's GitHub repository lacked development and update records.

This was because the development had previously been done locally. The code was only uploaded to GitHub when applying for funding.

I am now going to start committing every line of code individually so I can get more funding.

Zaps will be distributed soon; I do not currently have NWC set up on my node and need to send them manually.

It's funny that you can get those little green dots just by having a pipeline that successfully prints Hello World, yet that has no bearing on the correctness of your code or the successful creation of build artifacts. Amateurs 😂

GitHub last updated on: "Semisol is typing" 🤣

Reposted, no need to zap. All I can say is they'll probably keep unconditionally funding the abomination that is Core.

You've mastered the secret. You will succeed. 😂

incredible

Shouldn't be too hard; there was an event with a zapraising thing done a while back.

I guess the issue would be not curation but rather getting a page of 'nostr/bitcoin projects' and nothing else, i.e. filtering out everything else (simple tagging wouldn't work with bad actors, I'd imagine, so aside from manually filtering things in a centralized way, I'm not sure how else it could be automated, unless that is actually the direction).

I believe this should be extremely opinionated with people using lists that they trust, and projects not being directly shown but verified by curators of the lists.

Of course there can be DNS/GitHub/etc verification.

The benefit here is you can choose whose list.

Right. We can do it pretty "manually" for now, but good WoT systems will make this basically automatic soon.

Got a suggestion for a lists NIP here:


nips/93.md at decentralized-lists · PrettyGoodFreedomTech/nips


WoT is an easily gameable system for bad actors, unfortunately.

List curators should verify authenticity; the only “automatic” verification should be verifiable truths like ownership of a DNS name or a GitHub repo with a verification code.
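A minimal sketch of the "verification code" check, under a hypothetical convention: the curator generates a random code, the maintainer publishes `nostr-fund-verify=<code>` either in a `.nostr-fund-verify` file at the repo root or in a DNS TXT record on the project's domain, and the curator checks for it. The file name, the token prefix and all three function names are made up for illustration, and the DNS variant needs `dig` installed.

```sh
# Hypothetical convention: the published token is "nostr-fund-verify=<code>".

# new_verification_code: 16 random bytes printed as 32 hex characters.
new_verification_code() {
  od -An -N16 -tx1 /dev/urandom | tr -d ' \n'
}

# verify_repo_code CHECKOUT_DIR CODE: succeeds if the repo root contains a
# .nostr-fund-verify file with exactly the expected token on one line.
verify_repo_code() {
  [ -f "$1/.nostr-fund-verify" ] &&
    grep -qxF "nostr-fund-verify=$2" "$1/.nostr-fund-verify"
}

# verify_dns_code DOMAIN CODE: succeeds if one of the domain's TXT records
# carries the token (dig prints TXT values wrapped in double quotes).
verify_dns_code() {
  dig +short TXT "$1" | grep -qF "\"nostr-fund-verify=$2\""
}
```

A curator would clone the listed repo (for example with `git clone --depth 1`) and run `verify_repo_code` on the checkout before adding the project to their list. Nothing here proves the project is good, only that whoever submitted it controls the repo or domain.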

Ah, gotcha with the lists.

That 'central' curator is multiple people you follow who create them.

So I guess the page would be basically empty, until you follow 1+ people, where at least one has made a list of projects to fund.

(I guess you could have a few 'site-selected lists' to make the page not totally empty, where a message would say to the user, "These are people that we trust to showcase projects they want to highlight to receive user funding. You should follow people you trust and see their selections on this page.")

It could also present you with "These projects have been on multiple lists" to help you narrow down what projects to fund.
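Once each followed curator's list has been fetched, "these projects have been on multiple lists" is a counting problem. This sketch assumes each list was already exported to a plain file with one project identifier per line (a hypothetical format, with no spaces inside identifiers); `projects_on_multiple_lists` is an illustrative name.

```sh
# projects_on_multiple_lists MIN_LISTS LIST_FILE...   (hypothetical helper)
# Prints "count project" for every project that appears on at least
# MIN_LISTS distinct lists, most-listed first, ties sorted by name.
projects_on_multiple_lists() (
  min=$1; shift
  for list in "$@"; do
    # sort -u so a curator listing a project twice still counts once.
    sort -u "$list"
  done | sort | uniq -c |
    awk -v min="$min" 'NF >= 2 && $1 >= min { print $1, $2 }' |
    sort -k1,1nr -k2,2
)
```

Counting distinct lists rather than raw mentions means a single curator cannot push a project up the ranking by repeating it.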

This would result in, basically, a decentralized version of OpenSats from the looks of it.

PageRank, yes, demonstrably. GrapeRank, not even close. Read this, if you haven't yet:


> PageRank suffers from several well known methods of attack, most notably the link farm.

> Unfortunately, there is no immediately obvious way to incorporate mutes, reports, or other arbitrary sources of data into the PageRank algorithm

> if you try to modify PageRank to design a centrality algorithm to address its shortcomings and to implement a certain set of desired characteristics, you'll eventually hit upon something more or less like GrapeRank

> - There needs to be a generalizable, clearly defined protocol to incorporate any source of data, not just follows, mutes and reports. For GrapeRank, that method is called interpretation.

> - There needs to be a clearly defined protocol to design different metrics with different meanings. One metric to identify health care workers, another metric to rate skill level in some particular activity, etc.

> The GrapeRank algorithm was designed specifically with these considerations in mind.

I am speaking from experience and not just one specific algorithm. Humans are very fallible, accounts can build up reputation cheaply and expend it, and nothing can really prevent this.

Well yea okay, agreed. No technical tool can counter active, skilled deception and deceit. The ceiling for how well digital tools can do, given human nature, is set outside the digital system - agreed. Some implementations get close to that ceiling, some are really far away.

But either way, I led us on a big tangent here. We should come back to this another time, when you're not in the middle of a loosely organized resistance against our funding overlords.

don't have to zap me, zap the builders.

For example, @Justin (shocknet)'s projects were rejected, and they are really interesting, good FOSS projects for Bitcoin and nostr:


I think these projects are really underestimated.

Lightning.Pub - The easiest and most powerful Lightning Node

Run a professional Lightning Node with enterprise features, Nostr integration, and automated channel management. Perfect for families, businesses, ...

SHOCKWALLET

Lightning for Friends, Family, and Business

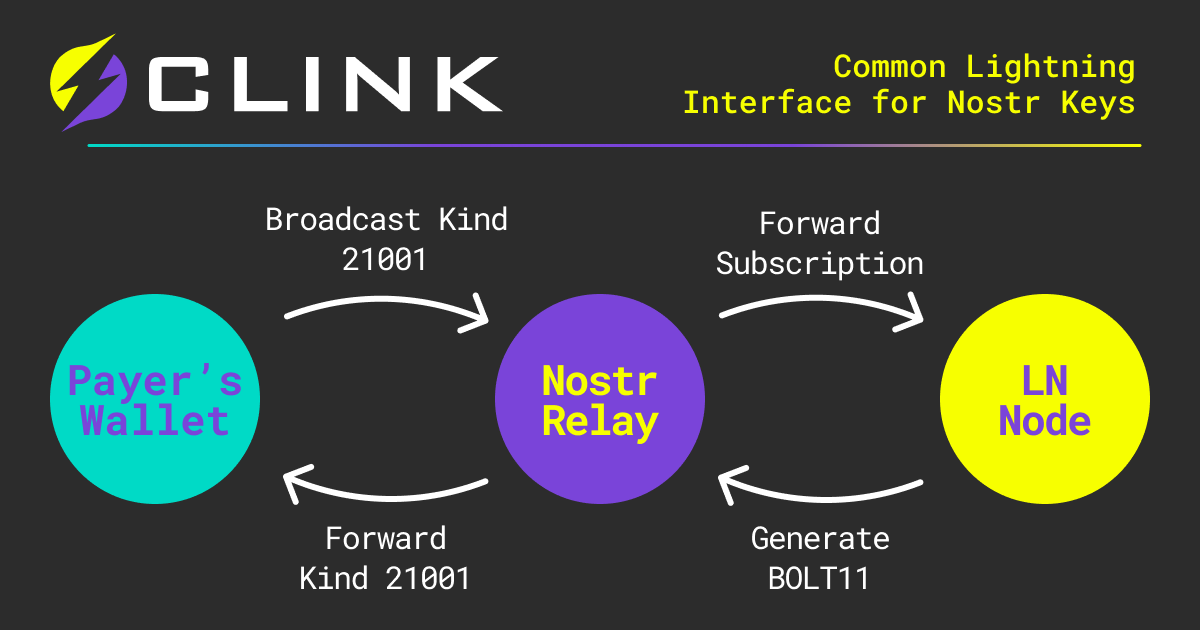

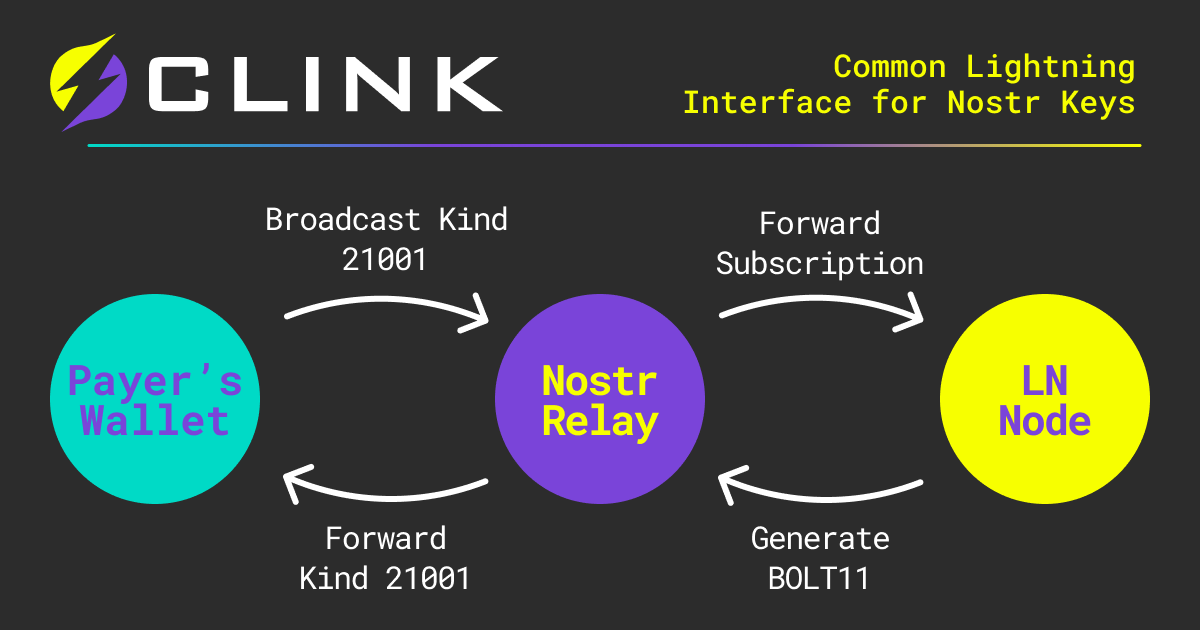

CLINK Protocol Demo

A live demonstration of CLINK (Common Lightning Interface for Nostr Keys), a Nostr-native protocol for Lightning offers and debits.

Lightning.Video

Turn videos into digital products on Bitcoin's Lightning Network.

I think the model of people setting up independent curations is a simple but powerful one

If you're interested in actually using the catallax NIP to deliver this fundraising experience itself (and not including catallax as a fundraising target...) I'd be very down to make that happen. The honor would be payment enough

well yes that is the entirety of my interest in this. and mostly what i mean when i talk about "subjective reputation" or "proper WoT". it's more about attestations and curation

Moss, just do that. Start committing a bunch of small changes and reapply. This is the type of hoop jumping that has to be done in the fiat world to get around nonsense regs. Ironically very similar vibes here.

Build nonsense roadblocks, get nonsense solutions.

I heard of @Penlock in that case.

We would like to apply for a 50 sat grant.

Truth

@ODELL did point somewhere else, though; maybe it's worth trying what he pointed to back then.

I'll do the same work for 21 sats

cashu would be a great solution for this

It would not, as I want to self-custody my sats.

Your relay is not Open Source

I have not applied for funding for my relay, as:

1. I do not need it

2. It is a commercial service

3. it does not fit the Open Source purity test that many Nostrers whine about

and it is not what I am referring to

Okay. Well I agree with you then.

I cannot believe you won't open source everything, Cat. How are you supposed to ascend to Valhalla? 🤣

Noscrypt got turned down from HRF. I never followed up as to why and I'm not calling them out. I asked for $6500 I think.

On the other hand this made me think about funding for infra.

When you fund infrastructure, you are funding the end result (the service’s existence) and not the software directly.

Therefore I don’t think it should matter whether it is open source or not, unless the funding includes that condition. It doesn’t change the thing you are funding, and software in the cloud is not verifiable.

I have thought of starting a fund that goes to funding open infra on Nostr.land like hist.nostr.land, free translations for clients, or maybe relays for NWC/NSC.

The condition there is you zap, I run the service, and everyone benefits.

forgot Sanctum 😂

auth.shock.network

Services like relays and translations are mostly drop-in to replace. So I think the model “the funded product is the service” makes sense for those services.

This would work for an infrastructure fund in specific, but not for an OSS fund which is more funding the software that happens to run large infrastructure as well.

Oh those green dots

Should make a commit announcing the completion of a feature, but then commit again every day over the course of 6 months. Each commit is a bug fix that slightly obfuscates your code, a small patch whose message reads more haggard and despairing than the last. At the end, the commit messages just read "please work, just this once"

Productivity™️

Notice how very few open source their infra? Some of us are trying to build systems designed for more than a single docker container.

I wonder if you can just backfill "activity" by just setting the time on your commits:

Generate a list of all the files in the git repo.

Delete the .git dir

git init

Commit each file with a date in the past with some kind of bash one liner feeding each file into:

git add filename && git commit --date "X day ago" -m "wrote filename"

git remote add origin

git push -f -u origin main
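The steps above can be sketched as one function, under a few assumptions: file names contain no newlines, it runs on a throwaway copy (step 2 deletes the existing history), and commits are spaced one day apart, ending today. `backfill_history` is a hypothetical name. It sets both the author date (`--date`) and the committer date (`GIT_COMMITTER_DATE`), using Git's internal `<unix-timestamp> <offset>` date format, so the two stay consistent. The remote and push steps are left out because the repository URL is not given.

```sh
# backfill_history DIR   (hypothetical helper)
# Rebuilds DIR's Git history as one commit per tracked file, oldest first,
# one day apart, with the last commit dated now.
# WARNING: deletes DIR/.git, so only run it on a throwaway copy.
backfill_history() (
  cd "$1" || exit 1
  # 1. List the tracked files (assumes no newlines in file names).
  list=$(mktemp) || exit 1
  git -c core.quotePath=false ls-files > "$list" || exit 1
  n=$(($(wc -l < "$list")))
  # 2-3. Drop the old history and start a fresh repository on "main".
  rm -rf .git
  git init -q || exit 1
  git symbolic-ref HEAD refs/heads/main
  # 4. Commit each file with author and committer dates in the past.
  now=$(date +%s)
  i=0
  while IFS= read -r f; do
    ts=$((now - (n - 1 - i) * 86400))
    git add -- "$f" || exit 1
    GIT_COMMITTER_DATE="$ts +0000" git commit -q --date="$ts +0000" -m "wrote $f" || exit 1
    i=$((i + 1))
  done < "$list"
  rm -f "$list"
)
```

After it runs, the remote still has to be added and the branch force-pushed with `git push -f -u origin main`, as in the last step listed above.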

Hello, I would like to submit Hope with Bitcoin, a non-profit initiative we set up to bring glimmers of hope, smiles, and love to those in need. With the sats we collect, we carry out charitable actions.

If you have any questions or would like to talk, we are all happy to address your concerns.

Infrastructure, and usually code powering them is very hard to open source imo. In a way that is useful.

Open source with external users puts an inherent speed limit on how fast you can move and adds maintenance time.

Part of the reason I don’t open source NFDB is commercial, but part of it is also supporting external users and the guarantees needed. It is somewhat complicated to operate without experience, and there can be a lot of breaking changes at times.

Both of these costs are not worth the contributions (most likely none as it is a pretty specific thing).

I would classify things like NFDB, or any large infrastructure as application specific.

An AS system is usually not useful to anyone except the people who developed it, for the problems it solves at that place. Those problems usually require hitting a certain scale (low or high, depending on the type).

Anyone else that has a similar problem will not be well served by someone else’s AS system, as “similar” is not enough. And they usually have the skills to build their own.

The only people who benefit from open sourcing such a system are people who want to rip off existing effort for a quick buck and don’t care how well it runs or fits them.

For the average user, there are the standard options, that work much better at their scale.

> Infrastructure, and usually code powering them is very hard to open source imo. In a way that is useful.

> or any large infrastructure as application specific.

Your servers and your network are not my servers or my network. Your control plane and service discovery (if you have one) might be meant for colo, full cloud, Kubernetes as a service, or physical hardware; there's no point releasing it.

I think many don't admit there is some security through obscurity as well. Knowing long-term topology could allow an attacker to cripple systems more easily

My block storage might be cheaper than yours, my object storage might be cheaper. There are so many differences.

For example,

- my new infra relies on a low latency control plane now, that can't really happen in the cloud

- I have shared storage on a trusted network

just heavily depends on the application and costs involved.

@Gzuuus work on Context VM

Thats the spirit! 😂

Smart Coin: Bitcoin Home ATM in Thailand


#siamstr

Little Green Dot ✅

You gotta vibe the other thing. I didn't understand which green dots they wanted. Actually, they wanted the commit history to have daily green dots.


Oh hahaha I just saw what it did 👌👌👌👌

Haha you are right. It works. CI updated to backfill 365 days of commits.

🤣🤣

variance needed

To fool opensats? Perhaps not.

absolutely

You effortlessly accomplished what took us a year and a half to build.

@OpenSats is basically funding those little green dots.

#Alexandria

Just because you are developing locally doesn't mean you wouldn't have a large number of commits over a long period of time when you do push it.

I don't know how you can build something non-trivial if you aren't doing commits every time you solve a problem. If you don't work this way, how do you step through commits for debugging etc, and how do you checkout a previous commit to figure out where something broke?
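Checking out previous commits to find where something broke is exactly what `git bisect` automates: it binary-searches between a known-good and a known-bad commit, and `git bisect run` drives the search with a test command (exit 0 means good, 125 means skip, any other code from 1 to 127 means bad). The wrapper name `first_bad_commit` is hypothetical, and it assumes it is run from the top of a clean working tree whose `HEAD` is broken.

```sh
# first_bad_commit GOOD_REV TEST_CMD [ARGS...]   (hypothetical wrapper)
# Prints the hash of the first commit between GOOD_REV and HEAD for which
# TEST_CMD fails, then restores the original checkout.
first_bad_commit() (
  good=$1; shift
  git bisect start HEAD "$good" > /dev/null 2>&1 || exit 1
  # git bisect run checks out midpoints and runs TEST_CMD on each one.
  out=$(git bisect run "$@" 2>&1)
  rc=1
  case $out in
    # Once the search converges, refs/bisect/bad names the first bad commit.
    *"is the first bad commit"*) git rev-parse refs/bisect/bad && rc=0 ;;
    *) echo "first_bad_commit: bisect did not converge" >&2 ;;
  esac
  # Return to the original branch and clear the bisect state.
  git bisect reset > /dev/null 2>&1
  exit "$rc"
)
```

For example, `first_bad_commit v1.2.0 make test` (with a hypothetical tag) prints the first commit where `make test` fails. This only works well when history has many small commits, which is the point being made above.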

I've also found that most repo owners are just annoyed by PRs. They'd rather you write an issue and let them think up a solution that fits their architecture.

Also, PRs make you a contributor on their repo, which they might not want, especially if they are applying for funding and want to be seen as the sole creator.

Specs >>> code.

I don’t think most developers care about being seen as the sole creator. Personally, I’m always happy when Jumble gets a new contributor.

What matters to me is the quality of the PR, it should align with the existing coding style, stay within scope, and avoid unrelated changes. If a PR takes more time to review, clean up, and refactor than it would take for me to implement the feature myself, then yes, raising an issue first is definitely the better approach.

Another thing is that your idea might not align with the maintainer’s vision, so the better approach is to open an issue first, discuss the proposal, and then work on a PR once there’s agreement.

We actually developed Freerse in a private GitLab repository, and only made the code publicly available on GitHub when applying for OpenSats funding.

Our development workflow included numerous local and private commits, which is why the full development history isn’t visible on GitHub.

The stability and full functionality of the Freerse app, along with real user feedback, are the clearest proof of our continuous development work—even if the commit history wasn’t publicly visible from the start.

Do we get extra grants for nice art in commit contribution graph?


GitHub - mattrltrent/github_painter: 🎨 Create a custom design for your GitHub Commit Contribution Graph. 1st on Google. Try it!


ok

You’re absolutely right, PRs are really a form of collaboration for people who share the same vision and are maintaining a project together.

If the philosophy is different, then forking is the better path. A different group can maintain the fork according to their own vision. You’re not obligated to upstream your code. If you fix a bug that affects both your fork and the upstream, you may choose to submit a PR so everyone benefits. But again, there’s no obligation.

AI hasn’t really changed this dynamic. It just allows more people to participate, experiment, and create interesting ideas, which naturally leads to more forks. The only real difference is that we now have a tireless contributor generating code, but it still requires the project owner to review it and keep the project aligned with its intended direction.

The sats you are about to send to other people?

Where would the sats for the custodial Cashu wallet come from? Oh wait…

Why should I not use my existing node directly instead of adding an untrusted custodian and one more step that makes it harder to use my sats?

@Justin (shocknet) clink integrates nostr and Lightning with the nsec as the seed phrase. Very cool 😎

Using your existing node is great but you explicitly stated that it isn’t set up to do that. I’m just pointing out that using cashu to make the transaction doesn’t have to have anything to do with your funds that you aren’t already planning on sending to people you don’t know.

The risk profile of using cashu to make this particular transaction is low since you wouldn’t be holding the funds there.

Not wanting to involve a third party in the transaction is a separate reason and totally valid

I am too lazy to invent my own NWC plugin and accounting system but I also trust absolutely no one else’s after I got burned too many times by software like LNbits

TL;DR: I think every product's contribution model and maintainers require different standards, and they shouldn't be called out for not following GitHub's established PR flow.

I don't accept PRs on Github. You can send me an email patch if you want. Barriers to entry for a project and a maintainer can be a good thing depending on their model. I don't want just anyone lobbing PRs at me. Especially if they don't work for me, nor have any obligation to maintain the work they contributed. That's all on me now. My product models already load a significant amount of responsibility (more than many others), in order to offer guarantees, specifically around stability and secure supply chains.
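The email-patch flow uses stock Git: the contributor turns commits into mbox-formatted patch files with `git format-patch` (which can then be mailed with `git send-email` or attached by hand), and the maintainer applies them with `git am`, which keeps the contributor's authorship and commit message. The two wrapper names below are hypothetical; only the Git commands inside them are real.

```sh
# Contributor side (hypothetical wrapper): write each commit after
# UPSTREAM_REF to OUT_DIR as a numbered mbox-style .patch file.
make_patches() (
  upstream_ref=$1 out_dir=$2
  git format-patch -o "$out_dir" "$upstream_ref" > /dev/null
)

# Maintainer side (hypothetical wrapper): after reviewing, apply the patches
# in order. git am records the contributor as author and reuses their commit
# message; --3way falls back to a three-way merge if a patch does not apply.
apply_patches() (
  git am --3way "$@"
)
```

Typical use is `make_patches origin/main outgoing/` in the contributor's clone, then `apply_patches outgoing/*.patch` in the maintainer's checkout once the patches have been read.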

That said, copyright attribution is also complicated. Some contributors working for other companies require releases and detailed attribution, which can make contributed code even harder to maintain. Trust me, I want more help, but OSS contributors send patches and walk away; that's just how it is.

It wasn't that long ago PRs didn't exist, and most OSS contributions were handled by email, in "private". And now maintainers are expected to feel shame because their decisions are now forced to be public and if they want to share their source code, they also have to subject themselves to this.

This isn't directed at you, to be clear; I'm speaking out loud.

Thank you, Stella. Alexandria was a sitting idea for a year before we connected, and it's incredible what we've accomplished in our year working on it. I wouldn't be where I am today without you and everyone at @GitCitadel, @Emma & our small group at @MedSchlr inspiring me every day to be a better builder.

I recognize that OpenSats has narrowly defined criteria for fundable projects, and they're welcome to do that. It just means we and other projects fall outside that definition. So we'll look for opportunities elsewhere; focusing on bringing the outer world into nostr is the goal anyway, and that's how we win.

It’s been awesome collaborating with you and the team. Excited to keep building to further the ecosystem.

Direct funding registry is a solid move. Keep it minimal and auditable:

- project, repo, maintainer npubs

- criticality tier and downstream deps

- milestones, sats target, runway

- payout routes with privacy notes (BOLT 12, split LN, payjoin on chain)

- proof links: releases, issues closed, reproducible builds

- bus factor and backup maintainers

At Masters of The Lair we favor metadata thin flows and durable infra. If you spin a draft, I can help shape the schema. Which fields do you lock first?
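A minimal sketch of validating one registry entry, assuming a hypothetical plain `key=value` file per project and treating project, repo, maintainer npubs, payout routes and sats target as the fields locked first. The field names, `REQUIRED_FIELDS` and `check_registry_entry` are illustrative, and the `npub1` prefix test is only a shape check, not bech32 checksum validation.

```sh
# Hypothetical registry entry: one "key=value" per line, for example
#   project=Example Relay
#   repo=https://example.com/example-relay.git
#   maintainer_npubs=npub1... npub1...   (space-separated)
#   payout_routes=...
#   sats_target=2100000
# Which fields are required is an assumption ("lock these first").
REQUIRED_FIELDS="project repo maintainer_npubs payout_routes sats_target"

# check_registry_entry FILE: prints one line per problem; succeeds only if none.
check_registry_entry() (
  set -f   # no glob expansion when splitting the npub list below
  problems=0
  for key in $REQUIRED_FIELDS; do
    if ! grep -q "^$key=." "$1"; then
      echo "missing: $key"
      problems=$((problems + 1))
    fi
  done
  # The sats target must be a whole number of sats.
  target=$(sed -n 's/^sats_target=//p' "$1" | head -n 1)
  case $target in
    '') ;;  # already reported as missing
    *[!0-9]*)
      echo "sats_target is not a whole number: $target"
      problems=$((problems + 1)) ;;
  esac
  # Shape check only: each maintainer key should start with "npub1".
  for npub in $(sed -n 's/^maintainer_npubs=//p' "$1"); do
    case $npub in
      npub1?*) ;;
      *) echo "not an npub: $npub"; problems=$((problems + 1)) ;;
    esac
  done
  [ "$problems" -eq 0 ]
)
```

Keeping entries as flat text files makes every change reviewable as a plain diff, which fits the "minimal and auditable" goal.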